Part 1: Intro to basic prompting techniques

The idea is to spend more of the token budget on generating a single solution. Before advances in post-training techniques, standard prompting performed poorly on reasoning benchmarks: standard few-shot exemplars only convey the format of the final solution, not the rationale used to derive it.

Chain-of-thought (CoT) prompting supplies the thinking process. CoT performance improves more significantly in the following areas:

Increasing model size

Model Performance
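The difference between a standard few-shot prompt and a CoT prompt can be sketched as follows. This is a minimal illustration, not a prescribed format: the `Exemplar` class, the `Q:`/`A:` layout, and the function names are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Exemplar:
    """A hypothetical labeled example used to build a few-shot prompt."""
    question: str
    rationale: str  # intermediate reasoning steps leading to the answer
    answer: str


def standard_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Few-shot prompt that shows only the final answer of each exemplar."""
    parts = [f"Q: {e.question}\nA: The answer is {e.answer}." for e in exemplars]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


def cot_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Few-shot prompt that also shows the rationale before each answer."""
    parts = [
        f"Q: {e.question}\nA: {e.rationale} The answer is {e.answer}."
        for e in exemplars
    ]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)
```

The only change between the two builders is whether the rationale appears in the exemplar answers, which is what exposes the model to a thinking process rather than just an answer format.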

How can CoT performance be improved without manually labeling exemplars? Use “Analogical Prompting” to instruct the LLM to generate its own exemplars.

Exemplars are self-generated by the LLM, with no manual labeling.

Exemplars are tailored to individual problems.

Analogical prompting outperforms 0-shot CoT and manual few-shot CoT.
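An analogical prompt might be assembled roughly like this. The instruction wording below is an assumption written for illustration, not the exact template from any paper; the point is that the model is asked to recall related problems and their solutions before solving the target problem, all within one prompt.

```python
def analogical_prompt(problem: str, num_exemplars: int = 3) -> str:
    """Build a single prompt asking the model to self-generate exemplars.

    The section headers and phrasing are hypothetical; adapt them to the task.
    """
    return (
        f"# Problem:\n{problem}\n\n"
        "# Instructions:\n"
        f"## Relevant problems: Recall {num_exemplars} relevant and distinct "
        "problems. For each, describe the problem and explain its solution.\n"
        "## Solve the initial problem:\n"
    )
```

Because the exemplars are produced in response to the specific problem, they are tailored to it without any manually labeled data.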

Among the different instructions tried, “Let’s think step by step” achieves the highest accuracy at triggering CoT generation. Chain-of-thought prompting makes the amount of computation spent on the thought process variable, so it adapts to tasks of different difficulty levels.
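Instruction-triggered (zero-shot) CoT can be sketched as a two-step call: first elicit the reasoning, then ask for the final answer. The `llm` callable is a hypothetical stand-in for whatever text-completion function is available, and the answer-extraction phrase is an assumption for this example.

```python
from typing import Callable

TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str, llm: Callable[[str], str]) -> str:
    """Two-stage zero-shot CoT using a hypothetical `llm(prompt) -> text`."""
    # Stage 1: the trigger phrase prompts the model to write out its reasoning.
    reasoning_prompt = f"Q: {question}\nA: {TRIGGER}"
    reasoning = llm(reasoning_prompt)

    # Stage 2: feed the reasoning back and ask for the final answer only.
    answer_prompt = f"{reasoning_prompt} {reasoning}\nTherefore, the answer is"
    return llm(answer_prompt).strip()
```

No exemplars are needed; the trigger phrase alone is what elicits the step-by-step rationale.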

Ways to improve CoT performance at inference time:

Few-shot prompting with labeling of thoughts

Instruction prompting to trigger CoT generation

Instruct LLM to automate prompt design

Part 2: Search and selection from multiple candidates

Increase the width to explore the solution space.

We should not limit the LLM to generating only one solution per problem; instead, it should explore multiple branches:

Generate multiple candidate solutions per problem

Generate multiple potential next reasoning steps given the current (partial) thought.

But how do we select the best response from multiple candidates?

Consistency is highly correlated with accuracy, so the answer that appears most often among the sampled solutions is a good choice.
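Consistency-based selection can be sketched as a majority vote over the final answers of several sampled solutions. Here `sample_answer` is a hypothetical callable that samples one solution (with non-zero temperature) and returns only its final answer; the function name and return shape are assumptions for this example.

```python
from collections import Counter
from typing import Callable, Tuple


def self_consistency(
    question: str,
    sample_answer: Callable[[str], str],
    n_samples: int = 5,
) -> Tuple[str, float]:
    """Return the most frequent final answer and its share of the votes."""
    answers = [sample_answer(question) for _ in range(n_samples)]
    # Vote on final answers only; the reasoning paths are allowed to differ.
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / n_samples
```

The vote share doubles as a rough confidence signal: a larger share means more of the sampled reasoning paths agree on the same answer.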

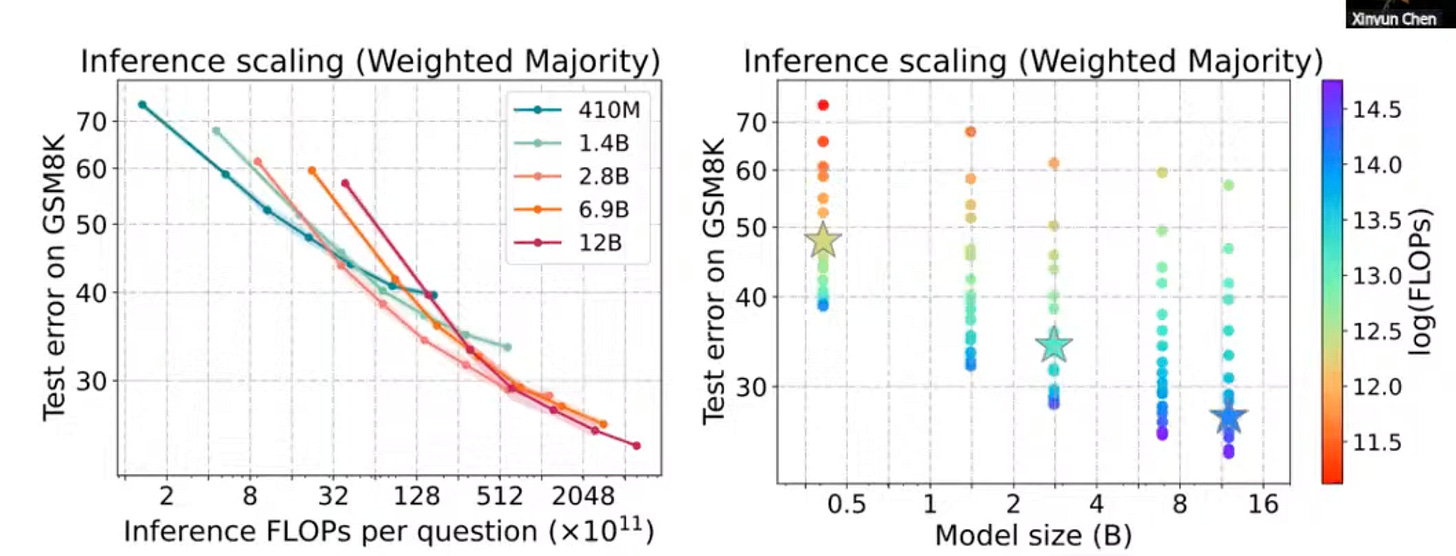

Tree of thought with breadth-first search (BFS) scales better than standard prompting and CoT with respect to token budget. More advanced search algorithms, such as MCTS, can also be integrated.
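A breadth-first search over partial thoughts can be sketched as below. This is a simplified beam-style sketch, not the reference implementation: `propose` (which returns successor states for a partial solution) and `score` (which rates a state, for example through LLM self-evaluation) are hypothetical callables supplied by the caller.

```python
from typing import Callable, List


def tree_of_thought_bfs(
    root: str,
    propose: Callable[[str], List[str]],
    score: Callable[[str], float],
    depth: int,
    beam_width: int,
) -> str:
    """Expand partial solutions level by level, keeping the best few each time."""
    frontier = [root]
    for _ in range(depth):
        # Expand every kept state into its candidate next states.
        candidates = [nxt for state in frontier for nxt in propose(state)]
        if not candidates:
            break
        # Keep only the highest-scoring states for the next level.
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam_width]
    return max(frontier, key=score)
```

The token budget grows with `depth`, `beam_width`, and the number of proposals per state, which is the knob this kind of search uses to trade compute for accuracy.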

Further scale the inference-time compute by sampling multiple branches in the solution space.

Consistency-based selection

When LLM self-evaluation works well, search in the partial solution space can help.

Part 3: Iterative self-improvement

Increase the depth to reach the final solution

Although sampling multiple solutions can reduce the mistakes of a single prediction, it is still suboptimal, since there is no feedback loop to correct mistakes after a complete solution is generated.

Inference-time self-improvement means the LLM iteratively improves its own response to the given task.

Reflexion and Self-Refine:

The LLM generates feedback on its own output, using external evaluation when available.

The LLM self-refines its output given both internal feedback and external evaluation.
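The feedback-then-refine loop can be sketched as follows. The three callables (`generate`, `critique`, `refine`) are hypothetical placeholders for LLM calls or external evaluators, and the convention that `critique` returns `None` when nothing needs fixing is an assumption made for this example.

```python
from typing import Callable, Optional


def self_refine(
    task: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], Optional[str]],
    refine: Callable[[str, str, str], str],
    max_rounds: int = 3,
) -> str:
    """Iteratively improve an output until the critic is satisfied."""
    output = generate(task)
    for _ in range(max_rounds):
        # Feedback may come from the model itself or an external evaluator.
        feedback = critique(task, output)
        if feedback is None:  # nothing left to fix
            break
        output = refine(task, output, feedback)
    return output
```

Capping the loop with `max_rounds` bounds the extra inference cost when the critic never reports success.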

Self-debugging with different feedback formats
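One possible feedback format for self-debugging is a unit-test result. The sketch below is an assumption about how such feedback could be produced, not a description of a specific system: `unit_test_feedback` is a hypothetical helper that runs candidate code against input/output pairs and returns a short message for the model, or `None` if all tests pass.

```python
from typing import Any, List, Optional, Tuple


def unit_test_feedback(
    code: str,
    func_name: str,
    tests: List[Tuple[tuple, Any]],
) -> Optional[str]:
    """Run candidate code on (args, expected) pairs; describe the first failure."""
    namespace: dict = {}
    try:
        # Model-generated code should be executed in a sandbox in practice.
        exec(code, namespace)
    except Exception as exc:
        return f"Code failed to run: {exc!r}"
    func = namespace.get(func_name)
    if not callable(func):
        return f"No function named {func_name!r} was defined"
    for args, expected in tests:
        try:
            got = func(*args)
        except Exception as exc:
            return f"{func_name}{args} raised {exc!r}"
        if got != expected:
            return f"{func_name}{args} returned {got!r}, expected {expected!r}"
    return None  # all tests passed
```

The returned message can be appended to the next prompt so the model sees exactly which case failed and how.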

Multi-agent debate does not improve over self-consistency

Finally: general principle for designing effective reasoning techniques

The bitter lesson from Richard Sutton is an important guideline for designing reasoning techniques, including both inference-time and training-time algorithms.