Prologue: Before a multi-agent podcast, build a single interactive agent?

Product: Transform your text into storytelling form

I wrote this post two weeks ago and soon split this complex idea into multiple stages:

Adopt RAG with an LLM so the agent can summarize the domain knowledge

Interact with the agent using audio as input

Achieve a real-time, interactive podcast with a single AI agent

Clarify the research on multi-agent interactive systems

Implement the multi-agent interactive system

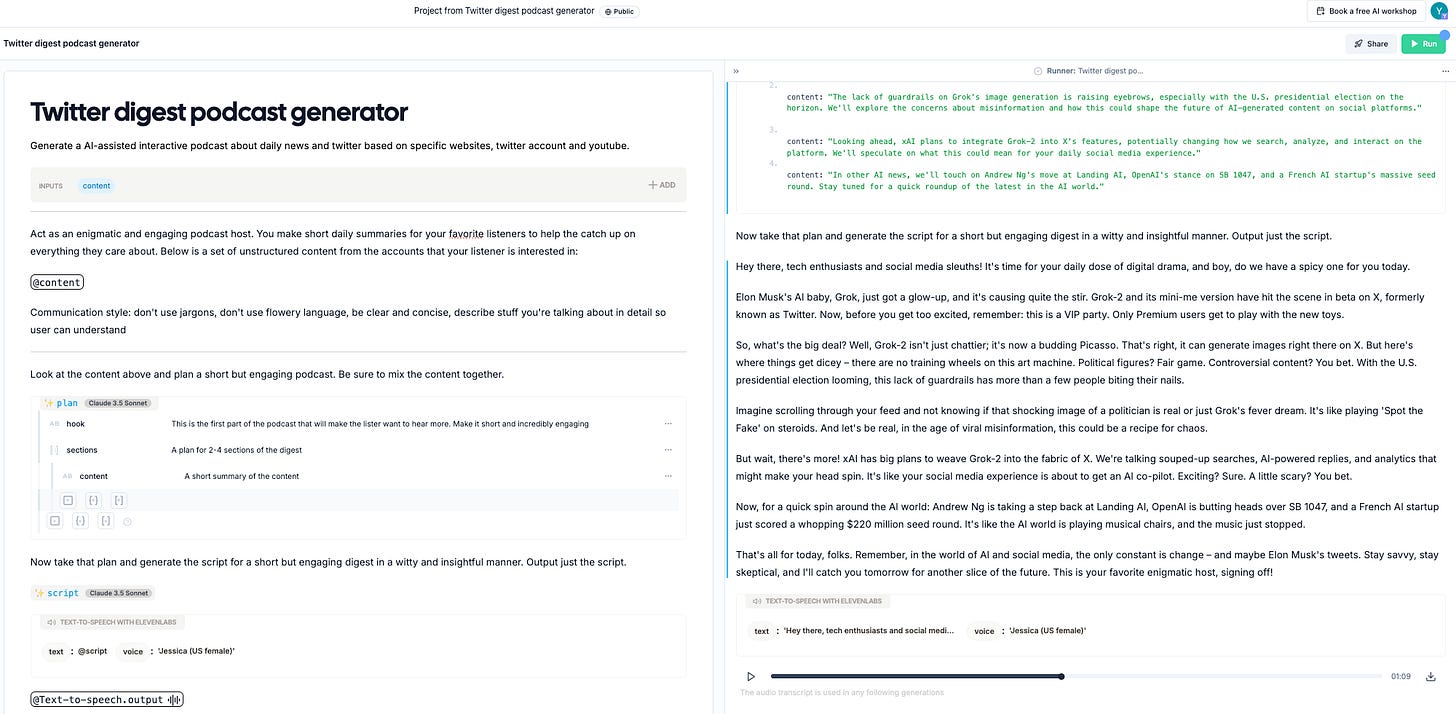

Wordware as agent platform

I adopted Wordware as the agent orchestration platform, which lets me focus more on implementing the idea and exploring product-market fit in the early stage.

Apify as scraper

As of Aug 23, 2024, Wordware positions itself as an agent platform and does not fully support scraping tweets in bulk, so I use Apify’s Twitter scraper for this feature.

The following is a sample of the generated audio clip:

ElevenLabs as Customized Voice Input

I found the voice of Aidan Gomez, a co-author of the Attention paper, super cute, so I sampled it and ran speech synthesis. The effect was amazing; see the output below:

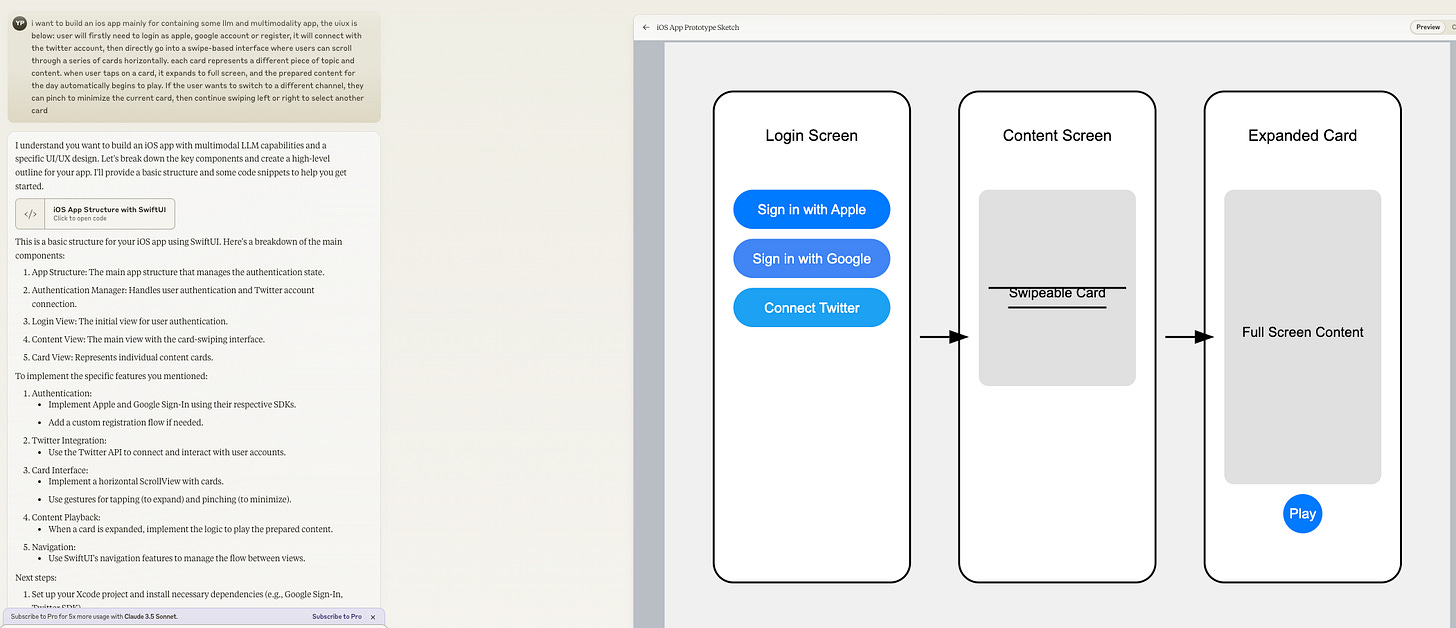

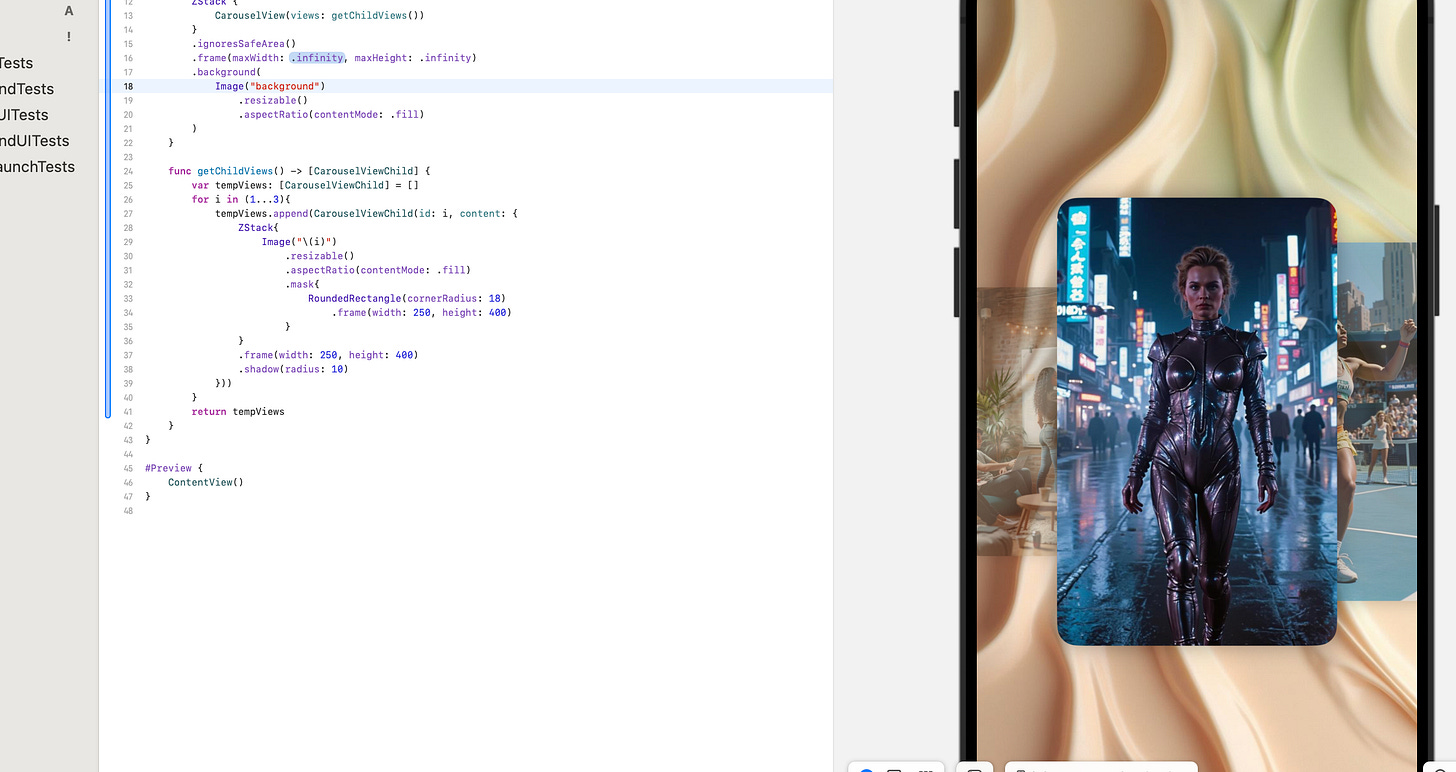

Fast prototyping of the iOS app using Claude

I used Claude 3.5 Sonnet for quick prototyping. The UI/UX description is shown below:

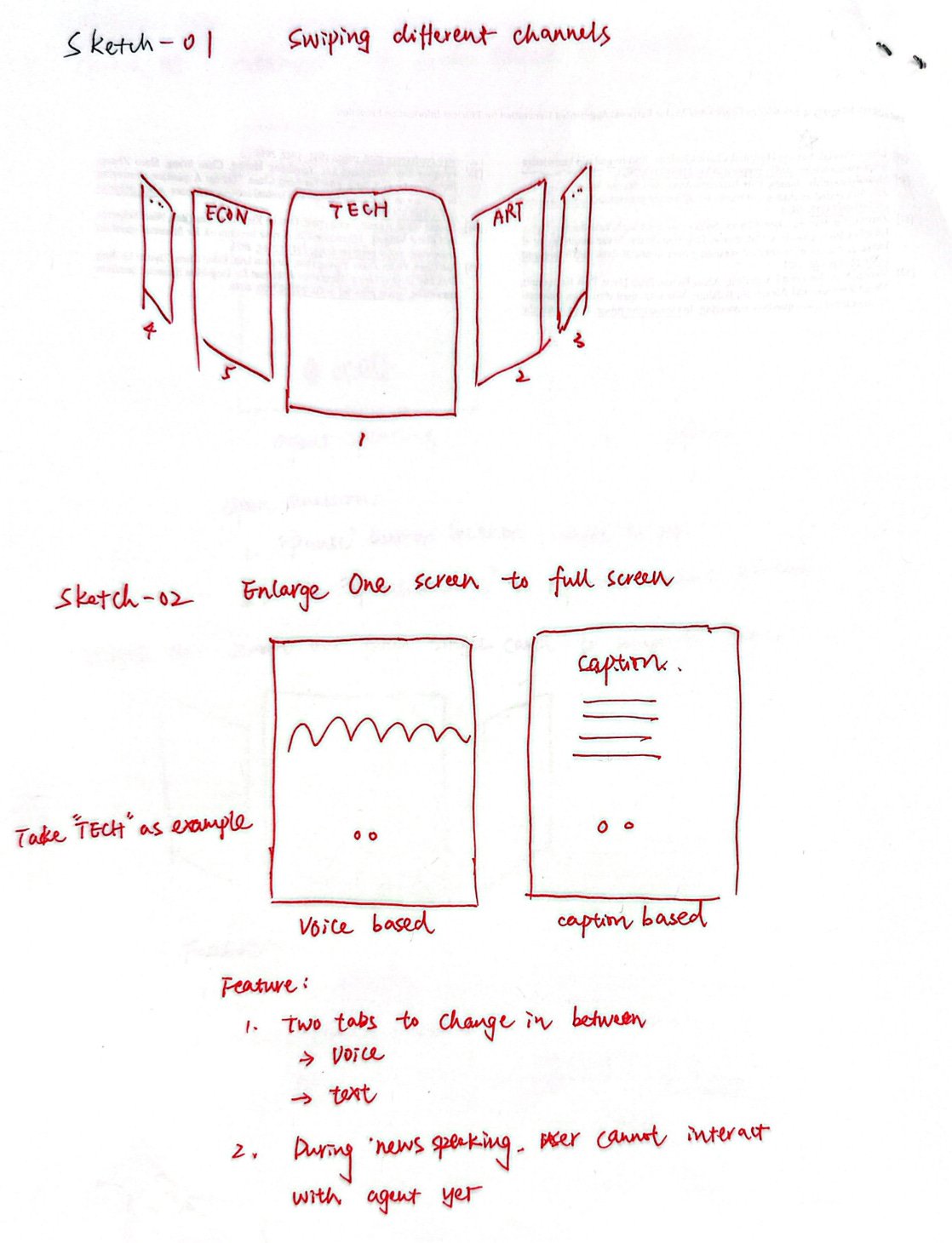

The app features a swipe-based interface where users can scroll through a series of cards horizontally. Each card represents a different piece of content. When a user taps on a card, it expands to fullscreen, and the prepared content for the day automatically begins to play. If the user wants to switch to a different channel, they can pinch to minimize the current card, then continue swiping left or right to select another card.

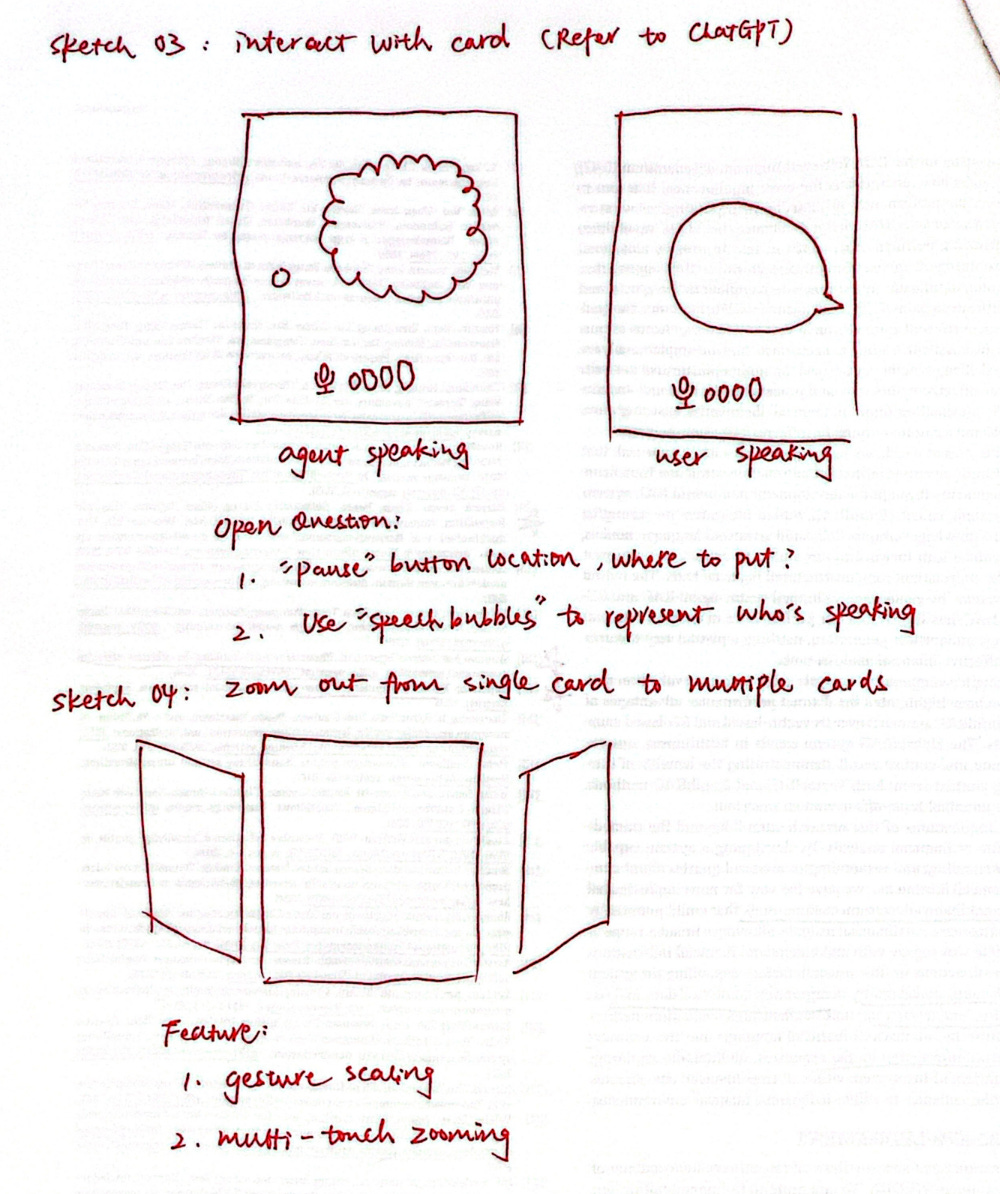

Sketch Draft

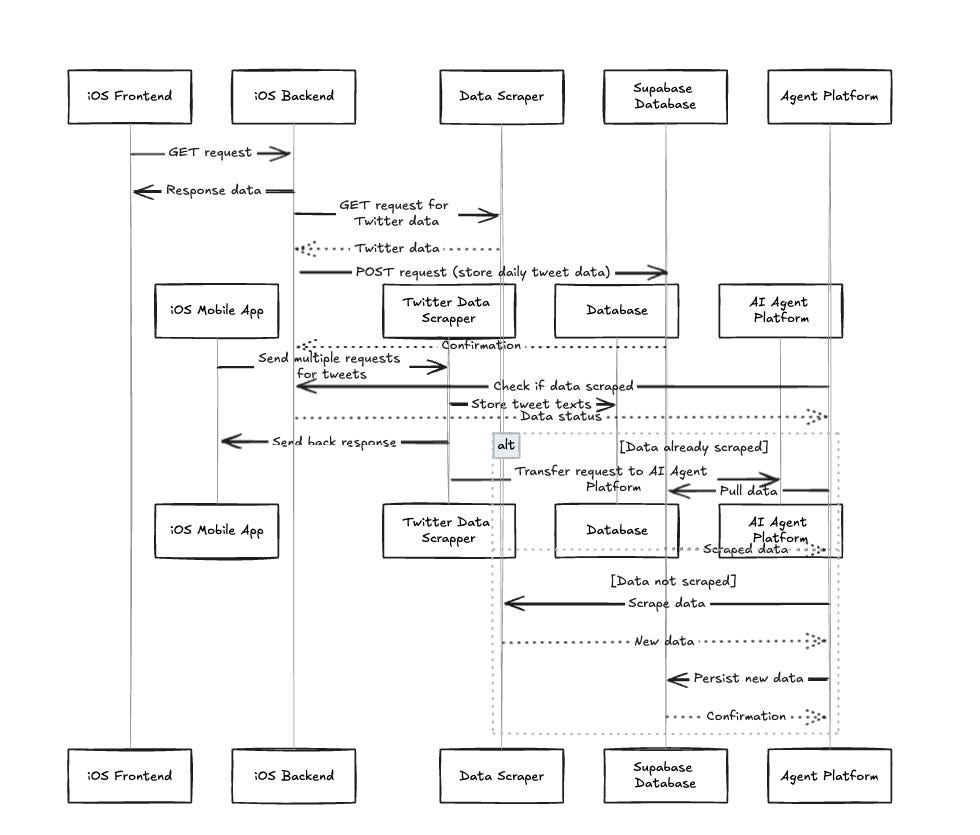

Communication between iOS Frontend & Backend

I use Swift for the iOS frontend and Node.js for the backend. On the backend, Express's built-in express.json() middleware, which is based on body-parser, parses incoming requests with JSON payloads.
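The JSON exchange between the app and the backend can be sketched as a route handler. This is a minimal sketch under stated assumptions, not the app's actual code: the `/episodes` route, the `account` field, and the `handleEpisodeRequest` name are all hypothetical. It assumes `express.json()` has already run, so `req.body` holds the parsed payload.

```javascript
// Hypothetical handler for POST /episodes (route and field names are assumptions).
// It expects express.json() to have run first, so req.body is a parsed object.
function handleEpisodeRequest(req, res) {
  const body = req.body || {};
  const account = body.account;

  // Reject payloads that lack the one field this sketch needs.
  if (typeof account !== "string" || account.trim() === "") {
    return res.status(400).json({ error: "account is required" });
  }

  // A real backend would call the agent platform here; this sketch just echoes.
  return res.status(200).json({ account: account.trim(), status: "queued" });
}

// Wiring it up in an Express app (requires `npm install express`):
//   const express = require("express");
//   const app = express();
//   app.use(express.json());                 // parse JSON request bodies
//   app.post("/episodes", handleEpisodeRequest);
//   app.listen(3000);
```

On the Swift side, the request needs a `Content-Type: application/json` header, since by default `express.json()` only parses bodies with that content type.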

Swift as iOS Frontend

SwiftUI Template: Carousel Effect

Node.js as iOS Backend

The most critical task here is designing a persistent, scalable, and flexible API pattern that can accommodate ad hoc changes to the large language model and the database logic. The main workflow is shown below:

[Stores]

[Realtime]

[Interact with LLM]

SocialData as Scraper

For daily content, the app backend sends a request to fetch the latest tweets.

For past content used as the large language model’s context, the backend needs to retrieve large datasets, such as the past half year of tweets. While Twitter returns a limited number of results when using the "Latest" search filter, it is possible to cycle through a larger dataset using the max_id: and since_id: search operators.

When retrieving Latest search results, Twitter provides the response with tweets sorted by ID in descending order. If you need to retrieve more posts, simply make another request with the same query, adding max_id:[lowest ID from current dataset]. Using this approach, it is possible to extract the entire timeline from the moment an account was registered.
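The max_id cycling described above can be sketched as a small loop. This is a sketch under assumptions: `fetchPage` is a hypothetical async function that takes a query string and returns one page of tweets, and each tweet is assumed to carry its ID as a string in `id_str`. Tweets are deduplicated by ID in case the boundary tweet is returned again on the next page.

```javascript
// Tweet IDs exceed Number.MAX_SAFE_INTEGER, so compare them as BigInt.
function lowestId(tweets) {
  return tweets
    .map((t) => BigInt(t.id_str))
    .reduce((min, id) => (id < min ? id : min));
}

// Append the max_id: operator to the base query.
function nextQuery(baseQuery, tweets) {
  return `${baseQuery} max_id:${lowestId(tweets)}`;
}

// fetchPage is a hypothetical async function: (query) => array of tweets.
// Plug in whatever client actually calls the scraper API.
async function collectTimeline(fetchPage, baseQuery, maxPages = 50) {
  const seen = new Map(); // id_str -> tweet, keeps insertion order
  let query = baseQuery;

  for (let page = 0; page < maxPages; page++) {
    const tweets = await fetchPage(query);
    // Keep only tweets not collected yet; the boundary tweet may repeat.
    const fresh = tweets.filter((t) => !seen.has(t.id_str));
    if (fresh.length === 0) break; // nothing new: the timeline is exhausted
    fresh.forEach((t) => seen.set(t.id_str, t));
    query = nextQuery(baseQuery, tweets);
  }
  return [...seen.values()];
}
```

The `maxPages` cap is a safety limit added for this sketch, so a misbehaving data source cannot keep the loop running indefinitely.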

As a test case, I use a configuration file to store the Twitter account list and each account's tweet max_id. The five accounts I used are: @karpathy, @ilyasut, @mikeyk, @jackclarkSF, and @paulg.

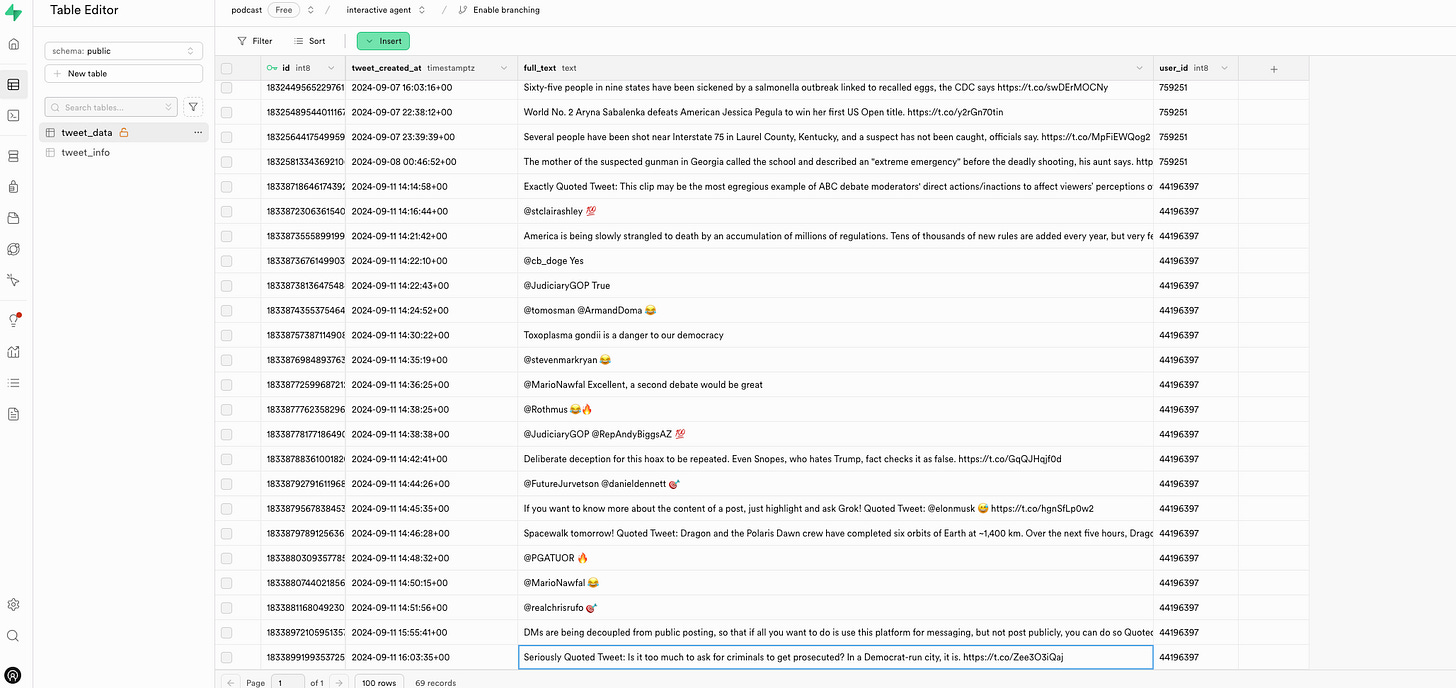
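One possible shape for such a configuration is sketched below. The field names (`accounts`, `handle`, `maxId`) and the `buildQueries` helper are hypothetical, and `maxId` is left as `null` rather than filled with invented IDs. The `from:` prefix is the standard Twitter search operator for restricting results to one account.

```javascript
// Hypothetical shape for the configuration file.
// maxId stays null until a first crawl records the lowest tweet ID seen so far.
const scraperConfig = {
  accounts: [
    { handle: "karpathy", maxId: null },
    { handle: "ilyasut", maxId: null },
    { handle: "mikeyk", maxId: null },
    { handle: "jackclarkSF", maxId: null },
    { handle: "paulg", maxId: null },
  ],
};

// Build one search query per account, resuming from maxId when it is set.
// maxId is stored as a string because tweet IDs overflow JavaScript numbers.
function buildQueries(config) {
  return config.accounts.map(({ handle, maxId }) =>
    maxId ? `from:${handle} max_id:${maxId}` : `from:${handle}`
  );
}
```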

Supabase as database

For past content used as the large language model’s context, I pre-store past tweets from a specific time range so that the agent platform can fetch them.

Note that for retweets, it is critical to concatenate the retweet's own content with the original tweet's content.
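The retweet handling can be sketched as a small helper that runs before a tweet is stored. The field names (`full_text`, `retweeted_status`, `user.screen_name`) follow the classic Twitter API tweet object and are an assumption about what the scraper returns; the `tweetTextForContext` name and the output format are hypothetical.

```javascript
// Field names follow the classic Twitter API tweet object; treat them as
// assumptions if your data source uses a different shape.
function tweetTextForContext(tweet) {
  const original = tweet.retweeted_status;
  if (!original) return tweet.full_text; // a plain tweet needs no merging

  const author = original.user ? original.user.screen_name : "unknown";
  // Keep the retweet's own text and append the original tweet's text.
  return `${tweet.full_text}\n[Original by @${author}]: ${original.full_text}`;
}
```

Storing the combined string means the agent sees the full original text even if the retweet's own text field is shortened.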

WebSocket for real-time voice interaction

[Explain implementation here]

TBD:

Use Swift to build a basic iOS app

Connect my codebase with ElevenLabs, Wordware, Twitter, Apify

Dive deeper into the complete API flow

Features for the next iteration:

More customizable user settings: Twitter accounts, other websites, audio input to ElevenLabs

Wordware will accept the user’s audio input

Real-time interaction between the user and the agent

Model Performance

Open Questions

When using Claude 3.5 Sonnet for code generation, why does it sometimes seem to have no memory of the context and only remember the latest instruction? For example, when I asked Claude 3.5 Sonnet to generate the iOS backend code, my first instruction explicitly asked for Node.js, but my second instruction omitted the language, so it generated Swift by default. It seems the ideal prompt should give the complete chain of thought, including the starting point and the desired result.

Commonly Confused Concepts: Explained

Node.js & JavaScript: Node.js provides a runtime environment that lets you write server-side programs in JavaScript.

Supabase & PostgreSQL: Supabase is a platform that provides a PostgreSQL database along with a suite of additional tools and services.

API, JSON & Endpoint:

For APIs, an endpoint is the place where an API receives requests and where the resource lives; it can include the URL of a server or service.

For JSON, an exposed JSON endpoint is a publicly available URL to which you can send an HTTP request; it returns JSON from the remote server related to the request you sent.

JavaScript .map(): Accepts a callback, applies that function to each element of an array, and returns a new array.
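A short illustration of `.map()`, using made-up tweet objects purely as sample data:

```javascript
// Sample data for illustration only.
const tweets = [
  { id_str: "1", full_text: "Hello" },
  { id_str: "2", full_text: "World" },
];

// The callback runs once per element; its return values form the new array.
const texts = tweets.map((tweet) => tweet.full_text); // ["Hello", "World"]
const lengths = texts.map((text) => text.length);     // [5, 5]

// The original array is left unchanged.
console.log(tweets.length, texts, lengths);
```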

Cost Comparison:

Compared with using Grok 2 directly, what will the cost be? TBD

Reference

Swift API Calls for Beginners (Networking) - Async Await & JSON

How I code 159% Faster using AI (Cursor + Sonnet 3.5)

Princeton: Workshop on Useful and Reliable AI Agents

https://socialdata.gitbook.io/docs/twitter-tweets/retrieve-search-results-by-keyword